Improving the OCR interface

Implementing Changes:

Improved GUI

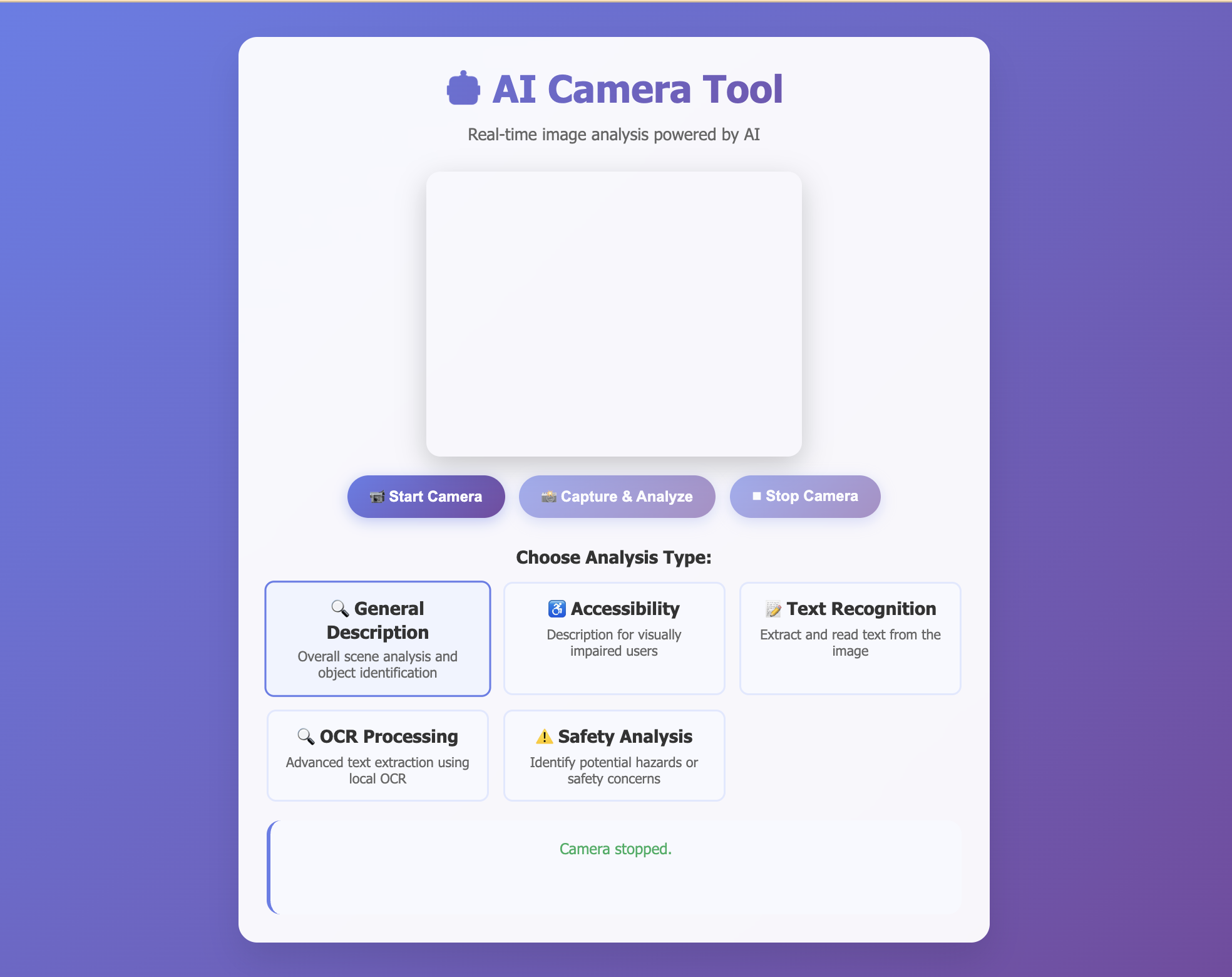

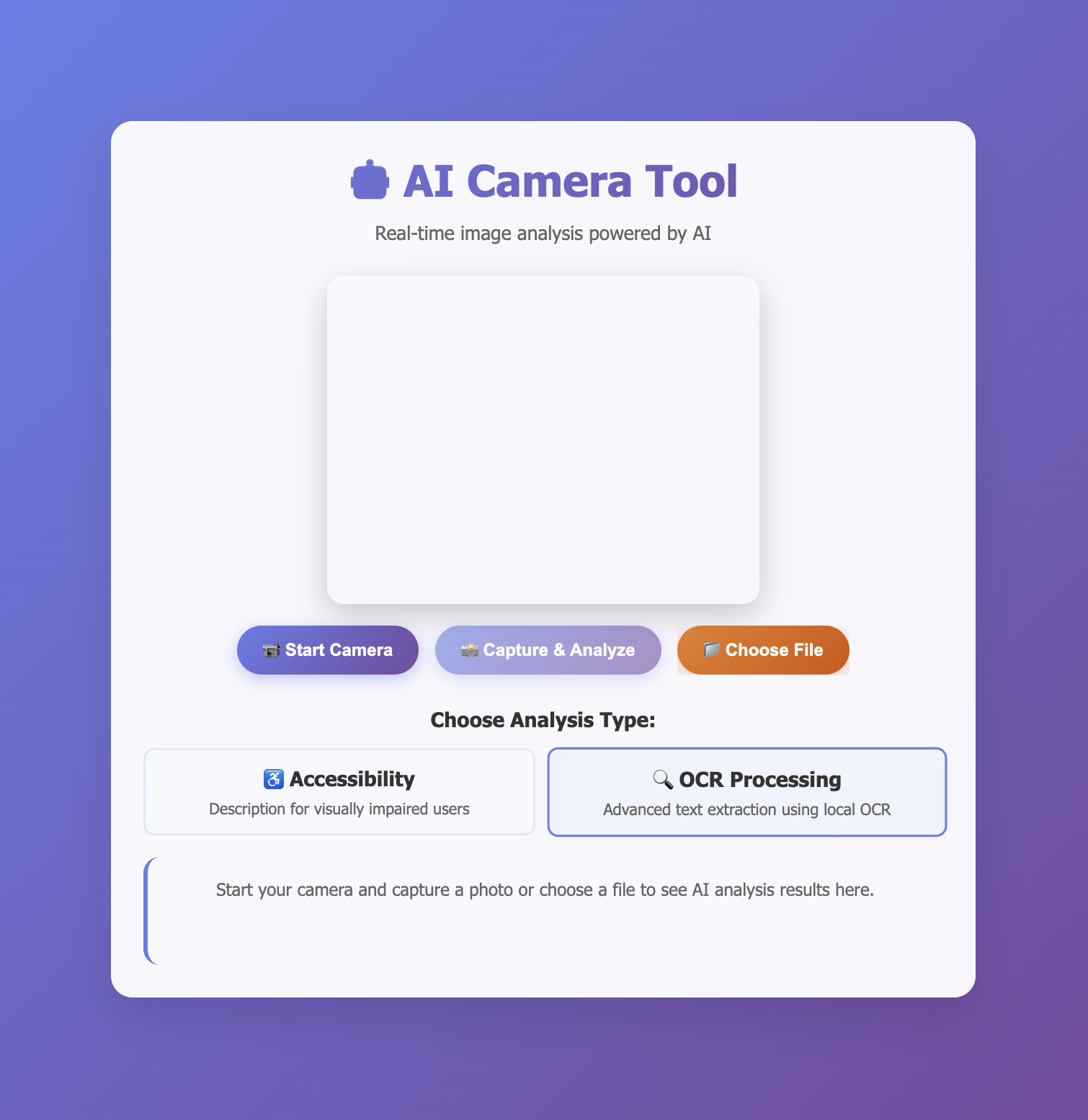

First, I wanted to clean up the GUI and the general codebase I had built so far.

This is the repository.

I started by changing the index.html file from this:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>AI Camera Tool</title>

<style>

* {

margin: 0;

padding: 0;

box-sizing: border-box;

}

body {

font-family: 'Segoe UI', Tahoma, Geneva, Verdana, sans-serif;

background: linear-gradient(135deg, #667eea 0%, #764ba2 100%);

min-height: 100vh;

display: flex;

align-items: center;

justify-content: center;

padding: 20px;

}

.container {

background: rgba(255, 255, 255, 0.95);

backdrop-filter: blur(10px);

border-radius: 20px;

padding: 30px;

box-shadow: 0 20px 40px rgba(0, 0, 0, 0.1);

max-width: 800px;

width: 100%;

}

.header {

text-align: center;

margin-bottom: 30px;

}

h1 {

color: #333;

margin-bottom: 10px;

font-size: 2.5em;

background: linear-gradient(135deg, #667eea, #764ba2);

-webkit-background-clip: text;

-webkit-text-fill-color: transparent;

}

.subtitle {

color: #666;

font-size: 1.1em;

}

.camera-section {

display: flex;

flex-direction: column;

align-items: center;

gap: 20px;

margin-bottom: 30px;

}

.video-container {

position: relative;

border-radius: 15px;

overflow: hidden;

box-shadow: 0 10px 30px rgba(0, 0, 0, 0.2);

}

video {

max-width: 100%;

width: 400px;

height: 300px;

object-fit: cover;

}

canvas {

display: none;

}

.controls {

display: flex;

gap: 15px;

flex-wrap: wrap;

justify-content: center;

}

button {

background: linear-gradient(135deg, #667eea, #764ba2);

color: white;

border: none;

padding: 12px 24px;

border-radius: 25px;

cursor: pointer;

font-size: 16px;

font-weight: 600;

transition: all 0.3s ease;

box-shadow: 0 4px 15px rgba(102, 126, 234, 0.4);

}

button:hover {

transform: translateY(-2px);

box-shadow: 0 6px 20px rgba(102, 126, 234, 0.6);

}

button:disabled {

opacity: 0.6;

cursor: not-allowed;

transform: none;

}

.analysis-section {

margin-top: 30px;

}

.analysis-types {

display: grid;

grid-template-columns: repeat(auto-fit, minmax(200px, 1fr));

gap: 15px;

margin-bottom: 20px;

}

.analysis-type {

background: #f8f9ff;

border: 2px solid #e1e8ff;

border-radius: 10px;

padding: 15px;

cursor: pointer;

transition: all 0.3s ease;

text-align: center;

}

.analysis-type:hover, .analysis-type.selected {

border-color: #667eea;

background: #f0f4ff;

transform: scale(1.02);

}

.analysis-type h3 {

color: #333;

margin-bottom: 5px;

}

.analysis-type p {

color: #666;

font-size: 0.9em;

}

.results {

background: #f8f9ff;

border-radius: 15px;

padding: 20px;

margin-top: 20px;

min-height: 100px;

border-left: 4px solid #667eea;

}

.loading {

display: flex;

align-items: center;

justify-content: center;

gap: 10px;

color: #667eea;

}

.spinner {

width: 20px;

height: 20px;

border: 2px solid #e1e8ff;

border-top: 2px solid #667eea;

border-radius: 50%;

animation: spin 1s linear infinite;

}

@keyframes spin {

0% { transform: rotate(0deg); }

100% { transform: rotate(360deg); }

}

.error {

color: #e74c3c;

text-align: center;

padding: 20px;

}

.success {

color: #27ae60;

text-align: center;

margin-bottom: 20px;

}

@media (max-width: 600px) {

.container {

padding: 20px;

}

h1 {

font-size: 2em;

}

video {

width: 100%;

height: 200px;

}

.controls {

flex-direction: column;

align-items: center;

}

button {

width: 200px;

}

}

</style>

</head>

<body>

<div class="container">

<div class="header">

<h1>🤖 AI Camera Tool</h1>

<p class="subtitle">Real-time image analysis powered by AI</p>

</div>

<div class="camera-section">

<div class="video-container">

<video id="video" autoplay muted playsinline></video>

<canvas id="canvas"></canvas>

</div>

<div class="controls">

<button id="startCamera">📹 Start Camera</button>

<button id="capturePhoto" disabled>📸 Capture & Analyze</button>

<button id="stopCamera" disabled>⏹ Stop Camera</button>

</div>

</div>

<div class="analysis-section">

<h3 style="color: #333; margin-bottom: 15px; text-align: center;">Choose Analysis Type:</h3>

<div class="analysis-types">

<div class="analysis-type selected" data-type="accessibility">

<h3>♿ Accessibility</h3>

<p>Description for visually impaired users</p>

</div>

<div class="analysis-type" data-type="ocr">

<h3>🔍 OCR Processing</h3>

<p>Advanced text extraction using local OCR</p>

</div>

</div>

<div id="results" class="results">

<p style="color: #666; text-align: center;">Start your camera and capture a photo to see AI analysis results here.</p>

</div>

</div>

</div>

<script>

class AICameraTool {

constructor() {

this.video = document.getElementById('video');

this.canvas = document.getElementById('canvas');

this.ctx = this.canvas.getContext('2d');

this.results = document.getElementById('results');

this.currentAnalysisType = 'accessibility';

this.stream = null;

this.initializeEventListeners();

}

initializeEventListeners() {

document.getElementById('startCamera').addEventListener('click', () => this.startCamera());

document.getElementById('capturePhoto').addEventListener('click', () => this.captureAndAnalyze());

document.getElementById('stopCamera').addEventListener('click', () => this.stopCamera());

document.querySelectorAll('.analysis-type').forEach(type => {

type.addEventListener('click', (e) => this.selectAnalysisType(e.target.closest('.analysis-type')));

});

}

async startCamera() {

try {

this.stream = await navigator.mediaDevices.getUserMedia({

video: {

width: { ideal: 640 },

height: { ideal: 480 },

facingMode: 'environment'

}

});

this.video.srcObject = this.stream;

document.getElementById('startCamera').disabled = true;

document.getElementById('capturePhoto').disabled = false;

document.getElementById('stopCamera').disabled = false;

this.showMessage('📹 Camera started successfully!', 'success');

} catch (error) {

console.error('Error accessing camera:', error);

this.showMessage('❌ Could not access camera. Please check permissions.', 'error');

}

}

stopCamera() {

if (this.stream) {

this.stream.getTracks().forEach(track => track.stop());

this.video.srcObject = null;

this.stream = null;

}

document.getElementById('startCamera').disabled = false;

document.getElementById('capturePhoto').disabled = true;

document.getElementById('stopCamera').disabled = true;

this.showMessage('Camera stopped.', 'success');

}

selectAnalysisType(element) {

document.querySelectorAll('.analysis-type').forEach(type => {

type.classList.remove('selected');

});

element.classList.add('selected');

this.currentAnalysisType = element.dataset.type;

}

captureAndAnalyze() {

// Set canvas dimensions to match video

this.canvas.width = this.video.videoWidth;

this.canvas.height = this.video.videoHeight;

// Draw current video frame to canvas

this.ctx.drawImage(this.video, 0, 0);

// Convert to base64

const imageData = this.canvas.toDataURL('image/jpeg', 0.8);

this.analyzeImage(imageData);

}

async analyzeImage(imageData) {

this.showLoading();

try {

if (this.currentAnalysisType === 'ocr') {

await this.performOCR(imageData);

} else {

await this.simulateAIAnalysis(imageData);

}

} catch (error) {

console.error('Analysis error:', error);

this.showMessage('❌ Analysis failed. Please try again.', 'error');

}

}

async performOCR(imageData) {

try {

const response = await fetch('http://127.0.0.1:5001/ocr', {

method: 'POST',

headers: {

'Content-Type': 'application/json',

},

body: JSON.stringify({

image: imageData

})

});

if (!response.ok) {

throw new Error(`OCR service error: ${response.statusText}`);

}

const result = await response.json();

this.displayOCRResults(result);

} catch (error) {

console.error('OCR error:', error);

this.showMessage(`❌ OCR failed: ${error.message}. Make sure the OCR server is running.`, 'error');

}

}

displayOCRResults(result) {

if (result.success) {

const hasText = result.text_found;

const extractedText = result.text || "No text detected in the image.";

this.results.innerHTML = `

<div style="border-bottom: 1px solid #e1e8ff; padding-bottom: 15px; margin-bottom: 15px;">

<div style="display: flex; justify-content: space-between; align-items: center; margin-bottom: 10px;">

<strong style="color: #333;">OCR Results</strong>

<span style="background: ${hasText ? '#27ae60' : '#f39c12'}; color: white; padding: 4px 12px; border-radius: 20px; font-size: 0.9em;">

${result.confidence} confidence

</span>

</div>

<div style="color: #666; font-size: 0.9em;">

${result.processing_info.image_size} • ${result.processing_info.preprocessing}

</div>

</div>

<div style="margin-bottom: 20px;">

<h4 style="color: #333; margin-bottom: 10px;">📝 Extracted Text:</h4>

<div style="background: #f8f9ff; border: 2px solid #e1e8ff; border-radius: 10px; padding: 15px; font-family: 'Courier New', monospace; line-height: 1.5; min-height: 60px;">

${hasText ? extractedText.replace(/\n/g, '<br>') : '<span style="color: #999; font-style: italic;">No text found in this image</span>'}

</div>

</div>

${hasText ? `

<div style="margin-bottom: 15px;">

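<!-- The OCR text is percent-encoded so quotes, backslashes and newlines cannot break the inline handler -->
<button onclick="navigator.clipboard.writeText(decodeURIComponent('${encodeURIComponent(extractedText).replace(/'/g, '%27')}')).then(() => alert('Text copied to clipboard!'))"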

style="background: #27ae60; color: white; border: none; padding: 8px 16px; border-radius: 20px; cursor: pointer; font-size: 14px;">

📋 Copy Text

</button>

<button onclick="speechSynthesis.speak(new SpeechSynthesisUtterance(decodeURIComponent('${encodeURIComponent(extractedText).replace(/'/g, '%27')}')))"

style="background: #3498db; color: white; border: none; padding: 8px 16px; border-radius: 20px; cursor: pointer; font-size: 14px; margin-left: 10px;">

🔊 Read Aloud

</button>

</div>

` : ''}

<div style="padding: 15px; background: #f0f7ff; border-radius: 10px; border-left: 4px solid #2196F3;">

<strong>💡 OCR Processing:</strong> This uses advanced image preprocessing and Tesseract OCR for text extraction.

<div style="margin-top: 8px; font-size: 0.9em; color: #555;">

✓ Grayscale conversion<br>

✓ Noise reduction<br>

✓ Adaptive thresholding<br>

✓ Multiple OCR configurations

</div>

</div>

`;

} else {

this.showMessage(`❌ OCR Error: ${result.error}`, 'error');

}

}

async simulateAIAnalysis(imageData) {

// Simulate API call delay

await new Promise(resolve => setTimeout(resolve, 2000));

const analysisPrompts = {

accessibility: "For accessibility: This tool would provide detailed spatial descriptions, identify potential obstacles or navigation aids, describe people's positions and actions, read any visible text aloud, and highlight important visual information that would help visually impaired users understand their environment."

};

const mockResults = {

confidence: Math.floor(Math.random() * 20) + 80,

timestamp: new Date().toLocaleTimeString(),

analysis: analysisPrompts[this.currentAnalysisType]

};

this.displayResults(mockResults);

}

displayResults(results) {

this.results.innerHTML = `

<div style="border-bottom: 1px solid #e1e8ff; padding-bottom: 15px; margin-bottom: 15px;">

<div style="display: flex; justify-content: space-between; align-items: center; margin-bottom: 10px;">

<strong style="color: #333;">Analysis Results</strong>

<span style="background: #667eea; color: white; padding: 4px 12px; border-radius: 20px; font-size: 0.9em;">

${results.confidence}% confidence

</span>

</div>

<div style="color: #666; font-size: 0.9em;">

Captured at ${results.timestamp}

</div>

</div>

<div style="line-height: 1.6; color: #444;">

${results.analysis}

</div>

<div style="margin-top: 20px; padding: 15px; background: #f0f7ff; border-radius: 10px; border-left: 4px solid #2196F3;">

<strong>💡 Implementation Note:</strong> To make this fully functional, you would integrate with:

<ul style="margin: 10px 0 0 20px; color: #555;">

<li>OpenAI Vision API for detailed scene analysis</li>

<li>Google Cloud Vision for object and text detection</li>

<li>Custom ML models for specialized accessibility features</li>

<li>Text-to-speech for audio feedback</li>

</ul>

</div>

`;

}

showLoading() {

this.results.innerHTML = `

<div class="loading">

<div class="spinner"></div>

<span>Analyzing image with AI...</span>

</div>

`;

}

showMessage(message, type) {

const className = type === 'error' ? 'error' : 'success';

this.results.innerHTML = `<div class="${className}">${message}</div>`;

}

}

// Initialize the camera tool when page loads

document.addEventListener('DOMContentLoaded', () => {

new AICameraTool();

});

</script>

</body>

</html>

This has a lot of bloat: it carries excess analysis types left over from when I was first building the tool, with the general description, safety analysis and text recognition options duplicating one another. To fix these small issues, I removed them.

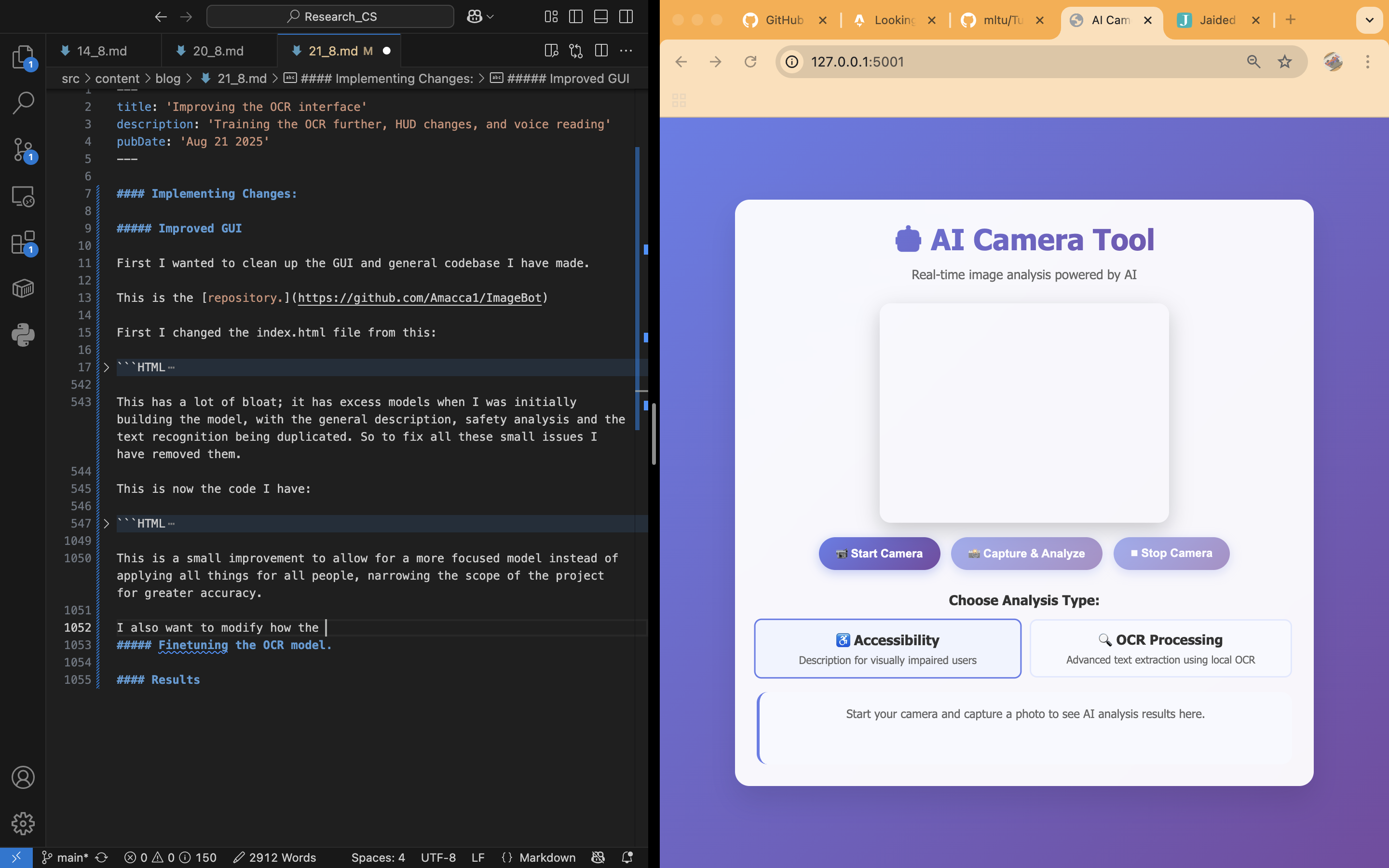

This is now the code I have:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>AI Camera Tool</title>

<style>

* {

margin: 0;

padding: 0;

box-sizing: border-box;

}

body {

font-family: 'Segoe UI', Tahoma, Geneva, Verdana, sans-serif;

background: linear-gradient(135deg, #667eea 0%, #764ba2 100%);

min-height: 100vh;

display: flex;

align-items: center;

justify-content: center;

padding: 20px;

}

.container {

background: rgba(255, 255, 255, 0.95);

backdrop-filter: blur(10px);

border-radius: 20px;

padding: 30px;

box-shadow: 0 20px 40px rgba(0, 0, 0, 0.1);

max-width: 800px;

width: 100%;

}

.header {

text-align: center;

margin-bottom: 30px;

}

h1 {

color: #333;

margin-bottom: 10px;

font-size: 2.5em;

background: linear-gradient(135deg, #667eea, #764ba2);

-webkit-background-clip: text;

-webkit-text-fill-color: transparent;

}

.subtitle {

color: #666;

font-size: 1.1em;

}

.camera-section {

display: flex;

flex-direction: column;

align-items: center;

gap: 20px;

margin-bottom: 30px;

}

.video-container {

position: relative;

border-radius: 15px;

overflow: hidden;

box-shadow: 0 10px 30px rgba(0, 0, 0, 0.2);

}

video {

max-width: 100%;

width: 400px;

height: 300px;

object-fit: cover;

}

canvas {

display: none;

}

.controls {

display: flex;

gap: 15px;

flex-wrap: wrap;

justify-content: center;

}

button {

background: linear-gradient(135deg, #667eea, #764ba2);

color: white;

border: none;

padding: 12px 24px;

border-radius: 25px;

cursor: pointer;

font-size: 16px;

font-weight: 600;

transition: all 0.3s ease;

box-shadow: 0 4px 15px rgba(102, 126, 234, 0.4);

}

button:hover {

transform: translateY(-2px);

box-shadow: 0 6px 20px rgba(102, 126, 234, 0.6);

}

button:disabled {

opacity: 0.6;

cursor: not-allowed;

transform: none;

}

.analysis-section {

margin-top: 30px;

}

.analysis-types {

display: grid;

grid-template-columns: repeat(auto-fit, minmax(200px, 1fr));

gap: 15px;

margin-bottom: 20px;

}

.analysis-type {

background: #f8f9ff;

border: 2px solid #e1e8ff;

border-radius: 10px;

padding: 15px;

cursor: pointer;

transition: all 0.3s ease;

text-align: center;

}

.analysis-type:hover, .analysis-type.selected {

border-color: #667eea;

background: #f0f4ff;

transform: scale(1.02);

}

.analysis-type h3 {

color: #333;

margin-bottom: 5px;

}

.analysis-type p {

color: #666;

font-size: 0.9em;

}

.results {

background: #f8f9ff;

border-radius: 15px;

padding: 20px;

margin-top: 20px;

min-height: 100px;

border-left: 4px solid #667eea;

}

.loading {

display: flex;

align-items: center;

justify-content: center;

gap: 10px;

color: #667eea;

}

.spinner {

width: 20px;

height: 20px;

border: 2px solid #e1e8ff;

border-top: 2px solid #667eea;

border-radius: 50%;

animation: spin 1s linear infinite;

}

@keyframes spin {

0% { transform: rotate(0deg); }

100% { transform: rotate(360deg); }

}

.error {

color: #e74c3c;

text-align: center;

padding: 20px;

}

.success {

color: #27ae60;

text-align: center;

margin-bottom: 20px;

}

@media (max-width: 600px) {

.container {

padding: 20px;

}

h1 {

font-size: 2em;

}

video {

width: 100%;

height: 200px;

}

.controls {

flex-direction: column;

align-items: center;

}

button {

width: 200px;

}

}

</style>

</head>

<body>

<div class="container">

<div class="header">

<h1>🤖 AI Camera Tool</h1>

<p class="subtitle">Real-time image analysis powered by AI</p>

</div>

<div class="camera-section">

<div class="video-container">

<video id="video" autoplay muted playsinline></video>

<canvas id="canvas"></canvas>

</div>

<div class="controls">

<button id="startCamera">📹 Start Camera</button>

<button id="capturePhoto" disabled>📸 Capture & Analyze</button>

<button id="stopCamera" disabled>⏹ Stop Camera</button>

</div>

</div>

<div class="analysis-section">

<h3 style="color: #333; margin-bottom: 15px; text-align: center;">Choose Analysis Type:</h3>

<div class="analysis-types">

<div class="analysis-type selected" data-type="accessibility">

<h3>♿ Accessibility</h3>

<p>Description for visually impaired users</p>

</div>

<div class="analysis-type" data-type="ocr">

<h3>🔍 OCR Processing</h3>

<p>Advanced text extraction using local OCR</p>

</div>

</div>

<div id="results" class="results">

<p style="color: #666; text-align: center;">Start your camera and capture a photo to see AI analysis results here.</p>

</div>

</div>

</div>

<script>

class AICameraTool {

constructor() {

this.video = document.getElementById('video');

this.canvas = document.getElementById('canvas');

this.ctx = this.canvas.getContext('2d');

this.results = document.getElementById('results');

this.currentAnalysisType = 'accessibility';

this.stream = null;

this.initializeEventListeners();

}

initializeEventListeners() {

document.getElementById('startCamera').addEventListener('click', () => this.startCamera());

document.getElementById('capturePhoto').addEventListener('click', () => this.captureAndAnalyze());

document.getElementById('stopCamera').addEventListener('click', () => this.stopCamera());

document.querySelectorAll('.analysis-type').forEach(type => {

type.addEventListener('click', (e) => this.selectAnalysisType(e.target.closest('.analysis-type')));

});

}

async startCamera() {

try {

this.stream = await navigator.mediaDevices.getUserMedia({

video: {

width: { ideal: 640 },

height: { ideal: 480 },

facingMode: 'environment'

}

});

this.video.srcObject = this.stream;

document.getElementById('startCamera').disabled = true;

document.getElementById('capturePhoto').disabled = false;

document.getElementById('stopCamera').disabled = false;

this.showMessage('📹 Camera started successfully!', 'success');

} catch (error) {

console.error('Error accessing camera:', error);

this.showMessage('❌ Could not access camera. Please check permissions.', 'error');

}

}

stopCamera() {

if (this.stream) {

this.stream.getTracks().forEach(track => track.stop());

this.video.srcObject = null;

this.stream = null;

}

document.getElementById('startCamera').disabled = false;

document.getElementById('capturePhoto').disabled = true;

document.getElementById('stopCamera').disabled = true;

this.showMessage('Camera stopped.', 'success');

}

selectAnalysisType(element) {

document.querySelectorAll('.analysis-type').forEach(type => {

type.classList.remove('selected');

});

element.classList.add('selected');

this.currentAnalysisType = element.dataset.type;

}

captureAndAnalyze() {

// Set canvas dimensions to match video

this.canvas.width = this.video.videoWidth;

this.canvas.height = this.video.videoHeight;

// Draw current video frame to canvas

this.ctx.drawImage(this.video, 0, 0);

// Convert to base64

const imageData = this.canvas.toDataURL('image/jpeg', 0.8);

this.analyzeImage(imageData);

}

async analyzeImage(imageData) {

this.showLoading();

try {

if (this.currentAnalysisType === 'ocr') {

await this.performOCR(imageData);

} else {

await this.simulateAIAnalysis(imageData);

}

} catch (error) {

console.error('Analysis error:', error);

this.showMessage('❌ Analysis failed. Please try again.', 'error');

}

}

async performOCR(imageData) {

try {

const response = await fetch('http://127.0.0.1:5001/ocr', {

method: 'POST',

headers: {

'Content-Type': 'application/json',

},

body: JSON.stringify({

image: imageData

})

});

if (!response.ok) {

throw new Error(`OCR service error: ${response.statusText}`);

}

const result = await response.json();

this.displayOCRResults(result);

} catch (error) {

console.error('OCR error:', error);

this.showMessage(`❌ OCR failed: ${error.message}. Make sure the OCR server is running.`, 'error');

}

}

displayOCRResults(result) {

if (result.success) {

const hasText = result.text_found;

const extractedText = result.text || "No text detected in the image.";

this.results.innerHTML = `

<div style="border-bottom: 1px solid #e1e8ff; padding-bottom: 15px; margin-bottom: 15px;">

<div style="display: flex; justify-content: space-between; align-items: center; margin-bottom: 10px;">

<strong style="color: #333;">OCR Results</strong>

<span style="background: ${hasText ? '#27ae60' : '#f39c12'}; color: white; padding: 4px 12px; border-radius: 20px; font-size: 0.9em;">

${result.confidence} confidence

</span>

</div>

<div style="color: #666; font-size: 0.9em;">

${result.processing_info.image_size} • ${result.processing_info.preprocessing}

</div>

</div>

<div style="margin-bottom: 20px;">

<h4 style="color: #333; margin-bottom: 10px;">📝 Extracted Text:</h4>

<div style="background: #f8f9ff; border: 2px solid #e1e8ff; border-radius: 10px; padding: 15px; font-family: 'Courier New', monospace; line-height: 1.5; min-height: 60px;">

${hasText ? extractedText.replace(/\n/g, '<br>') : '<span style="color: #999; font-style: italic;">No text found in this image</span>'}

</div>

</div>

${hasText ? `

<div style="margin-bottom: 15px;">

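<!-- The OCR text is percent-encoded so quotes, backslashes and newlines cannot break the inline handler -->
<button onclick="navigator.clipboard.writeText(decodeURIComponent('${encodeURIComponent(extractedText).replace(/'/g, '%27')}')).then(() => alert('Text copied to clipboard!'))"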

style="background: #27ae60; color: white; border: none; padding: 8px 16px; border-radius: 20px; cursor: pointer; font-size: 14px;">

📋 Copy Text

</button>

<button onclick="speechSynthesis.speak(new SpeechSynthesisUtterance(decodeURIComponent('${encodeURIComponent(extractedText).replace(/'/g, '%27')}')))"

style="background: #3498db; color: white; border: none; padding: 8px 16px; border-radius: 20px; cursor: pointer; font-size: 14px; margin-left: 10px;">

🔊 Read Aloud

</button>

</div>

` : ''}

<div style="padding: 15px; background: #f0f7ff; border-radius: 10px; border-left: 4px solid #2196F3;">

<strong>💡 OCR Processing:</strong> This uses advanced image preprocessing and Tesseract OCR for text extraction.

<div style="margin-top: 8px; font-size: 0.9em; color: #555;">

✓ Grayscale conversion<br>

✓ Noise reduction<br>

✓ Adaptive thresholding<br>

✓ Multiple OCR configurations

</div>

</div>

`;

} else {

this.showMessage(`❌ OCR Error: ${result.error}`, 'error');

}

}

async simulateAIAnalysis(imageData) {

// Simulate API call delay

await new Promise(resolve => setTimeout(resolve, 2000));

const analysisPrompts = {

accessibility: "For accessibility: This tool would provide detailed spatial descriptions, identify potential obstacles or navigation aids, describe people's positions and actions, read any visible text aloud, and highlight important visual information that would help visually impaired users understand their environment."

};

const mockResults = {

confidence: Math.floor(Math.random() * 20) + 80,

timestamp: new Date().toLocaleTimeString(),

analysis: analysisPrompts[this.currentAnalysisType]

};

this.displayResults(mockResults);

}

displayResults(results) {

this.results.innerHTML = `

<div style="border-bottom: 1px solid #e1e8ff; padding-bottom: 15px; margin-bottom: 15px;">

<div style="display: flex; justify-content: space-between; align-items: center; margin-bottom: 10px;">

<strong style="color: #333;">Analysis Results</strong>

<span style="background: #667eea; color: white; padding: 4px 12px; border-radius: 20px; font-size: 0.9em;">

${results.confidence}% confidence

</span>

</div>

<div style="color: #666; font-size: 0.9em;">

Captured at ${results.timestamp}

</div>

</div>

<div style="line-height: 1.6; color: #444;">

${results.analysis}

</div>

<div style="margin-top: 20px; padding: 15px; background: #f0f7ff; border-radius: 10px; border-left: 4px solid #2196F3;">

<strong>💡 Implementation Note:</strong> To make this fully functional, you would integrate with:

<ul style="margin: 10px 0 0 20px; color: #555;">

<li>OpenAI Vision API for detailed scene analysis</li>

<li>Google Cloud Vision for object and text detection</li>

<li>Custom ML models for specialized accessibility features</li>

<li>Text-to-speech for audio feedback</li>

</ul>

</div>

`;

}

showLoading() {

this.results.innerHTML = `

<div class="loading">

<div class="spinner"></div>

<span>Analyzing image with AI...</span>

</div>

`;

}

showMessage(message, type) {

const className = type === 'error' ? 'error' : 'success';

this.results.innerHTML = `<div class="${className}">${message}</div>`;

}

}

// Initialize the camera tool when page loads

document.addEventListener('DOMContentLoaded', () => {

new AICameraTool();

});

</script>

</body>

</html>

This is a small improvement that makes the tool more focused: instead of trying to be all things to all people, it narrows the scope of the project for greater accuracy.

I also want to change how the tool takes in images by adding a file upload option for extra functionality.
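As a rough sketch of what file input involves, the helper below reads an image from disk and wraps it in the same JSON shape that the page posts to `/ocr`: an `image` field holding a base64 data URL. The name `build_image_payload` and the JPEG fallback are my own assumptions for illustration, not code from the repository. It is written in Python so it can double as a quick way to exercise the backend without a camera.

```python
import base64
import json
import mimetypes
from pathlib import Path


def build_image_payload(path):
    """Return a JSON body of the form {"image": "data:<type>;base64,<data>"}."""
    file_path = Path(path)
    # Guess the media type from the file extension. Fall back to JPEG
    # (an assumption: that is what the camera capture in index.html produces).
    media_type, _ = mimetypes.guess_type(file_path.name)
    if media_type is None or not media_type.startswith("image/"):
        media_type = "image/jpeg"
    encoded = base64.b64encode(file_path.read_bytes()).decode("ascii")
    return json.dumps({"image": f"data:{media_type};base64,{encoded}"})
```

The returned string can be sent as the body of a POST request with a `Content-Type: application/json` header, which mirrors what the page's `fetch` call does.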

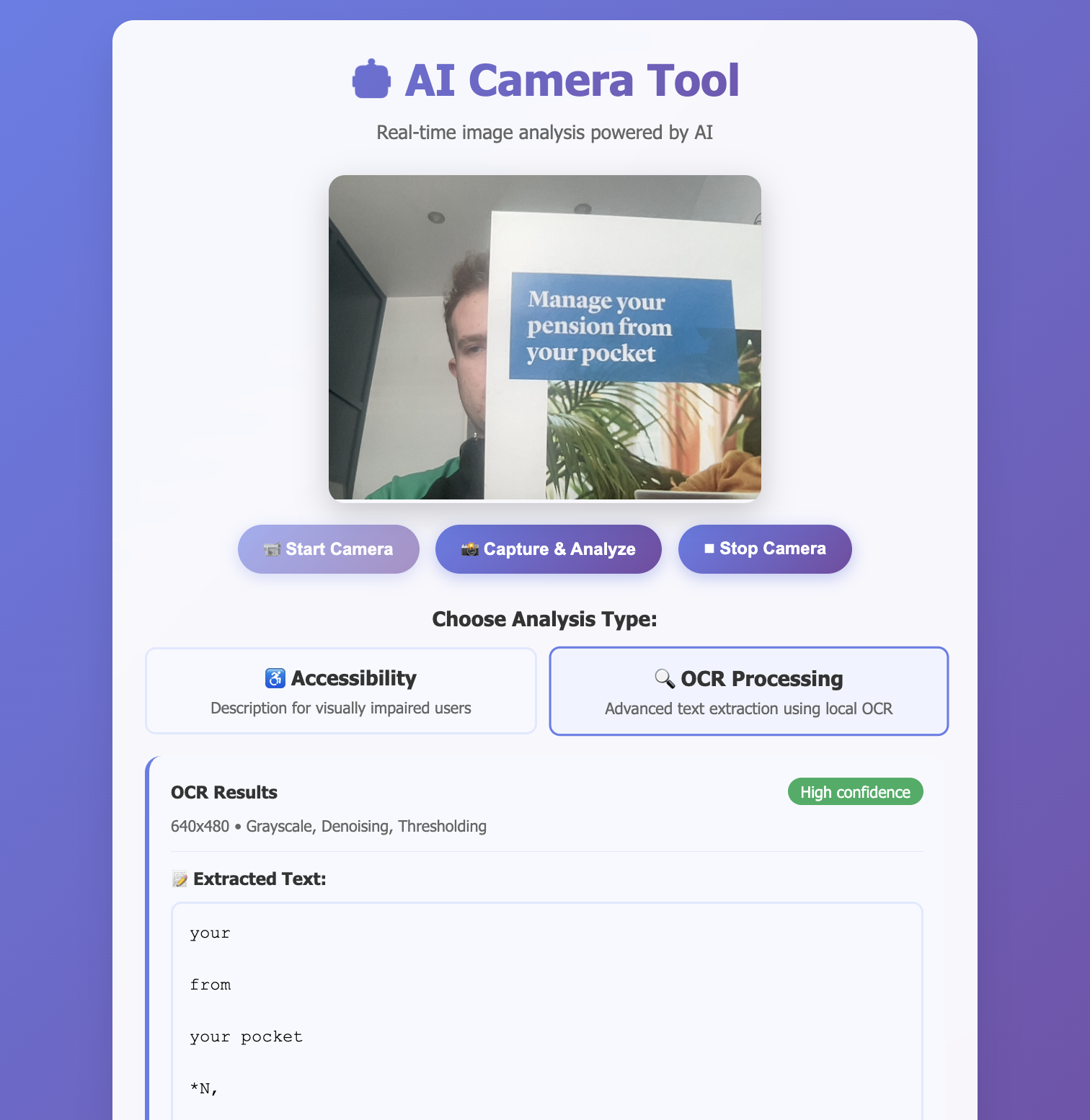

It now looks like this:

Improving Accessibility with the Claude API

I am also going to change how the accessibility mode works and make it properly functional. The current design, which only simulates an analysis, needs to be tweaked so that an API works out what to write as a description. This is the new and updated backend:

from flask import Flask, request, jsonify, render_template
from flask_cors import CORS
import cv2
import pytesseract
import numpy as np
import base64
from PIL import Image
import io
import os
import easyocr
import anthropic

app = Flask(__name__)
CORS(app)

# Load the EasyOCR English model once at startup
try:
    ocr_reader = easyocr.Reader(['en'])
    print("EasyOCR initialized successfully")
except Exception as e:
    print(f"EasyOCR initialization failed: {e}")
    ocr_reader = None

# Create the Claude client from the ANTHROPIC_API_KEY environment variable
try:
    claude_client = anthropic.Anthropic(
        api_key=os.getenv('ANTHROPIC_API_KEY')
    )
    print("Claude client initialized successfully")
except Exception as e:
    print(f"Claude initialization failed: {e}")
    claude_client = None

def preprocess_image(image):
    # Grayscale, median-blur denoising, then Otsu binarization for Tesseract
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    denoised = cv2.medianBlur(gray, 5)
    _, thresh = cv2.threshold(denoised, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)
    return thresh

def easyocr_extract(image):
    try:
        if ocr_reader is None:
            return ""
        results = ocr_reader.readtext(image)
        extracted_texts = []
        # Keep only detections EasyOCR is reasonably sure about
        for (bbox, text, confidence) in results:
            if confidence > 0.5:
                extracted_texts.append(text)
        return ' '.join(extracted_texts)
    except Exception as e:
        print(f"EasyOCR error: {e}")
        return ""

def ocr_core(img):
    try:
        # Try several Tesseract page segmentation modes
        configs = [
            '--oem 3 --psm 6',
            '--oem 3 --psm 8',
            '--oem 3 --psm 7',
            '--oem 3 --psm 11',
            '--oem 3 --psm 13'
        ]
        results = []
        for config in configs:
            try:
                text = pytesseract.image_to_string(img, config=config)
                if text.strip():
                    results.append(text.strip())
            except Exception:
                # Skip a configuration that fails and move on to the next
                continue
        # Return the longest result across all configurations
        if results:
            return max(results, key=len)
        else:
            return ""
    except Exception as e:
        print(f"OCR error: {e}")
        return ""

def claude_describe_image(base64_string):
    try:
        if claude_client is None:
            return "Claude client not available. Please check your API key."
        if ',' in base64_string:
            base64_string = base64_string.split(',')[1]
        message = claude_client.messages.create(
            model="claude-opus-4-1-20250805",
            max_tokens=1000,
            messages=[
                {
                    "role": "user",
                    "content": [
                        {
                            "type": "image",
                            "source": {
                                "type": "base64",
                                "media_type": "image/jpeg",
                                "data": base64_string
                            }
                        },
                        {
                            "type": "text",
                            "text": "Please provide a detailed description of this image for accessibility purposes. Focus on: 1) Overall scene and setting, 2) People and their activities, 3) Objects and their locations, 4) Any text visible in the image, 5) Colors and visual elements that would help someone understand the image. Be specific and descriptive."
                        }
                    ]
                }
            ]
        )
        return message.content[0].text
    except Exception as e:
        print(f"Claude description error: {e}")
        return f"Error generating description: {str(e)}"

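def base64_to_image(base64_string):
    try:
        # Drop the "data:image/...;base64," prefix if present
        if ',' in base64_string:
            base64_string = base64_string.split(',')[1]
        img_data = base64.b64decode(base64_string)
        # Force three channels so images with an alpha channel (e.g. PNG uploads)
        # do not break the RGB-to-BGR conversion below
        pil_image = Image.open(io.BytesIO(img_data)).convert('RGB')
        opencv_image = cv2.cvtColor(np.array(pil_image), cv2.COLOR_RGB2BGR)
        return opencv_image
    except Exception as e:
        print(f"Base64 conversion error: {e}")
        return None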

@app.route('/', methods=['GET'])
def home():
    return render_template('index.html')

@app.route('/ocr', methods=['POST', 'OPTIONS'])
def process_ocr():
    # Answer the CORS preflight request
    if request.method == 'OPTIONS':
        return jsonify({"status": "OK"}), 200
    try:
        print(f"Received OCR request from {request.remote_addr}")
        data = request.get_json()
        if not data:
            print("No JSON data received")
            return jsonify({"error": "No JSON data provided"}), 400
        if 'image' not in data:
            print("No image field in JSON data")
            return jsonify({"error": "No image data provided"}), 400
        print(f"Processing image data of length: {len(data['image'])}")
        image = base64_to_image(data['image'])
        if image is None:
            print("Failed to convert base64 to image")
            return jsonify({"error": "Invalid image data"}), 400
        print(f"Image converted successfully: {image.shape}")
        # Try EasyOCR first; fall back to Tesseract on the preprocessed image
        extracted_text = easyocr_extract(image)
        if not extracted_text:
            print("EasyOCR failed, trying Tesseract...")
            processed_image = preprocess_image(image)
            extracted_text = ocr_core(processed_image)
        print(f"OCR completed. Text length: {len(extracted_text)}")
        response = {
            "success": True,
            "text": extracted_text,
            "text_found": bool(extracted_text.strip()),
            "confidence": "High" if len(extracted_text.strip()) > 10 else "Medium" if extracted_text.strip() else "Low",
            "processing_info": {
                "image_size": f"{image.shape[1]}x{image.shape[0]}",
                "preprocessing": "EasyOCR + Tesseract fallback"
            }
        }
        print("Sending response back to client")
        return jsonify(response)
    except Exception as e:
        print(f"OCR processing error: {e}")
        import traceback
        traceback.print_exc()
        return jsonify({"error": f"OCR processing failed: {str(e)}"}), 500

@app.route('/describe', methods=['POST', 'OPTIONS'])
def process_description():
    # Answer the CORS preflight request
    if request.method == 'OPTIONS':
        return jsonify({"status": "OK"}), 200
    try:
        from datetime import datetime  # local import keeps this route self-contained
        print(f"Received description request from {request.remote_addr}")
        data = request.get_json()
        if not data:
            print("No JSON data received")
            return jsonify({"error": "No JSON data provided"}), 400
        if 'image' not in data:
            print("No image field in JSON data")
            return jsonify({"error": "No image data provided"}), 400
        print("Processing image data for description")
        description = claude_describe_image(data['image'])
        print(f"Description completed. Length: {len(description)}")
        response = {
            "success": True,
            "description": description,
            "timestamp": f"Generated at {datetime.now().strftime('%H:%M:%S')}",
            # Label matches the model requested in claude_describe_image
            "service": "Claude Opus 4.1"
        }
        print("Sending description response back to client")
        return jsonify(response)
    except Exception as e:
        print(f"Description processing error: {e}")
        import traceback
        traceback.print_exc()
        return jsonify({"error": f"Description failed: {str(e)}"}), 500

if __name__ == '__main__':
    # Report which optional components are available before starting
    try:
        version = pytesseract.get_tesseract_version()
        print(f"Tesseract version: {version}")
    except Exception:
        print("WARNING: Tesseract not found. Please install it first.")
    if ocr_reader is None:
        print("WARNING: EasyOCR not available. Install with: pip install easyocr")
    if claude_client is None:
        print("WARNING: Claude not available. Set ANTHROPIC_API_KEY environment variable")
    print("\nServer starting on http://127.0.0.1:5001")
    print("Available endpoints:")
    print("- / : Web interface")
    print("- /ocr : Text extraction (EasyOCR + Tesseract)")
    print("- /describe : Image description (Claude)")
    app.run(debug=True, host='127.0.0.1', port=5001)

As of right now, the backend is very basic: a simple Flask app running on the development server. This has been enough for simple tasks, but in future I will look at moving it to a production server. I have tried a few ways of doing this before; the production server I landed on was gunicorn, and I deployed previous work via fly.io (see my work on my neo4j knowledge graph webapp here!). This project will most likely work similarly. In future I will also look at developing that further, to allow a JSON file to be added for connections, along with some general functionality and aesthetic design tweaks. (I will link it here in future.)
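As a rough idea of what the move to gunicorn could look like, here is a minimal config sketch. The file name, port, worker count and timeout are all my assumptions for illustration, not values taken from this project or from my fly.io setup.

```python
# gunicorn.conf.py (hypothetical file name and values, not from the project)
import multiprocessing

# Listen on all interfaces. 8080 is an assumed port; match it to the host's config.
bind = "0.0.0.0:8080"

# Common starting point from the gunicorn docs: (2 x cores) + 1 workers.
workers = multiprocessing.cpu_count() * 2 + 1

# OCR and image description calls can be slow, so allow longer than the 30 s default.
timeout = 120

# Log requests to stdout so the hosting platform can collect them.
accesslog = "-"
```

Assuming the Flask object is called `app` and lives in `app.py`, this would be started with `gunicorn -c gunicorn.conf.py app:app`. Each worker is a separate process that imports the app, so a memory-heavy model like EasyOCR may call for fewer workers than the formula suggests.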

Text to Speech

I have also added a text-to-speech feature. Most of the work is done by the speech synthesis built into the browser, with a server-side fallback that comes in two parts: the function that performs the text-to-speech with pyttsx3, and the route that lets the webpage call it:

def speak_text(text):

"""

Convert text to speech using pyttsx3

"""

try:

if tts_engine is None:

return False

def run_tts():

tts_engine.say(text)

tts_engine.runAndWait()

thread = threading.Thread(target=run_tts)

thread.daemon = True

thread.start()

return True

except Exception as e:

print(f"TTS error: {e}")

return False

@app.route('/speak', methods=['POST', 'OPTIONS'])

def text_to_speech():

if request.method == 'OPTIONS':

return jsonify({"status": "OK"}), 200

try:

print(f"Received TTS request from {request.remote_addr}")

data = request.get_json()

if not data:

return jsonify({"error": "No JSON data provided"}), 400

if 'text' not in data:

return jsonify({"error": "No text provided"}), 400

text = data['text'].strip()

if not text:

return jsonify({"error": "Empty text provided"}), 400

print(f"Converting text to speech: {text[:50]}...")

success = speak_text(text)

if success:

response = {

"success": True,

"message": "Text-to-speech started successfully",

"text_length": len(text)

}

else:

response = {

"success": False,

"error": "Text-to-speech engine not available"

}

return jsonify(response)

except Exception as e:

print(f"TTS processing error: {e}")

import traceback

traceback.print_exc()

return jsonify({"error": f"TTS failed: {str(e)}"}), 500

The frontend was also tweaked slightly so that speech works in the browser:

async speakText(text) {

console.log('Speaking text:', text.substring(0, 50) + '...');

try {

if ('speechSynthesis' in window) {

speechSynthesis.cancel();

const utterance = new SpeechSynthesisUtterance(text);

utterance.rate = 0.8;

utterance.volume = 1.0;

utterance.pitch = 1.0;

const voices = speechSynthesis.getVoices();

console.log('Available voices:', voices.length);

const englishVoice = voices.find(voice =>

voice.lang.startsWith('en')

);

if (englishVoice) {

utterance.voice = englishVoice;

console.log('Using voice:', englishVoice.name);

}

utterance.onstart = () => {

console.log('✅ Speech started successfully');

};

utterance.onend = () => {

console.log('✅ Speech ended');

};

utterance.onerror = (event) => {

console.error('❌ Speech error:', event.error);

alert('Speech synthesis error: ' + event.error);

};

speechSynthesis.speak(utterance);

} else {

alert('Speech synthesis not supported in this browser');

}

} catch (error) {

console.error('TTS error:', error);

alert('Text-to-speech failed: ' + error.message);

}

}

Results

Overall, the technical challenges pushed me to explore technologies I hadn’t worked with before. Integrating Claude’s vision API was interesting: seeing how accurately it could describe complex scenes was genuinely impressive. The API consistently provided rich, detailed descriptions that went far beyond simple object detection, and were better than anything the models I trained myself ever produced.

One of the biggest surprises was how much effort went into getting reliable OCR results. I initially started with just Tesseract, but found that it struggled with certain types of images - especially those with complex layouts or poor lighting. That’s when I decided to implement a dual-engine approach.

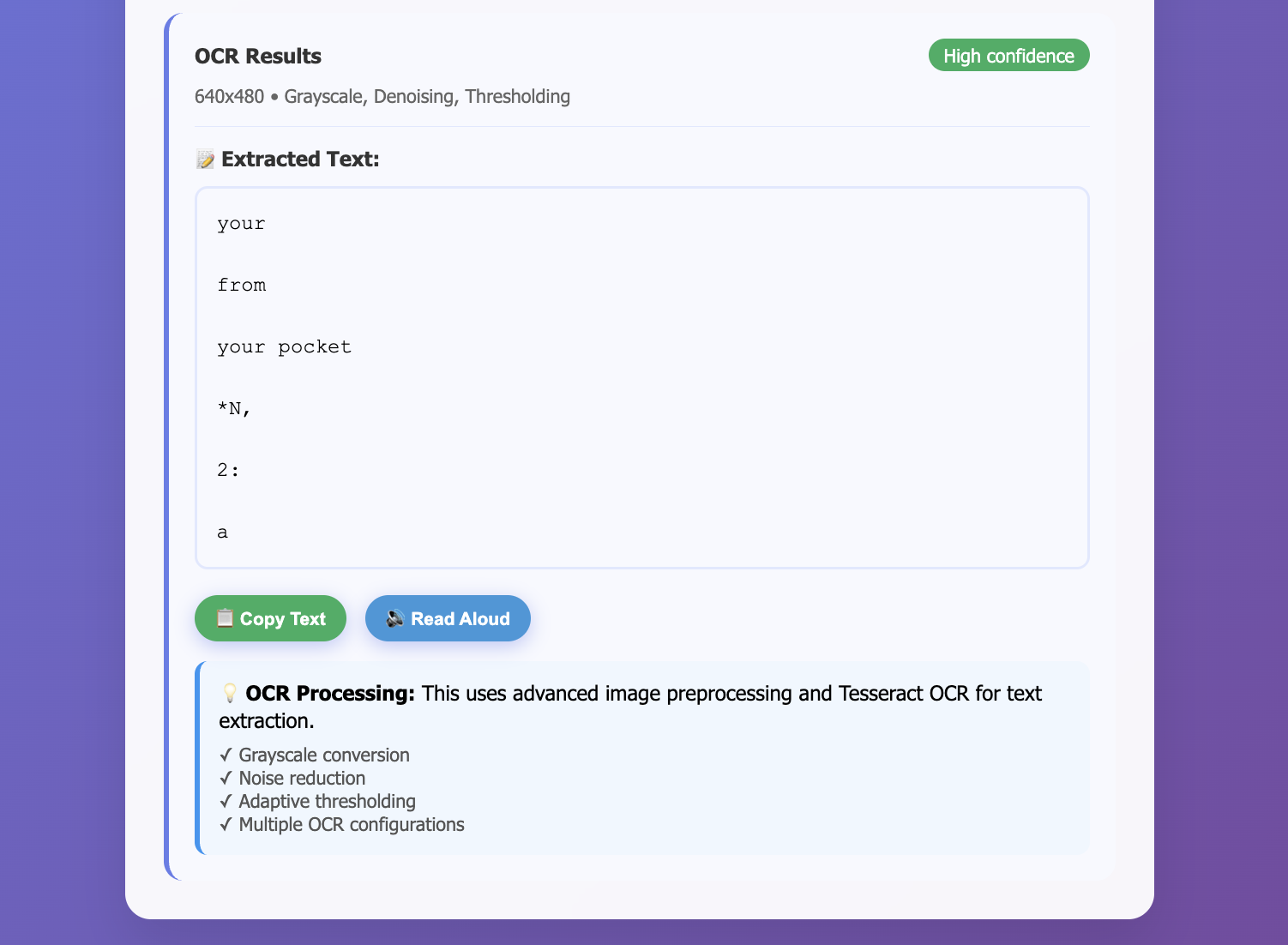

The hybrid OCR system was probably my biggest technical win. EasyOCR, being neural network-based, handles modern text and varied fonts much better, while Tesseract excels with traditional documents. By using EasyOCR as the primary engine and falling back to Tesseract when needed, I achieved much more reliable results across different image types.
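The primary-then-fallback idea can be sketched roughly like this. It is a simplified illustration, not the code from my server: `run_ocr_with_fallback` and the two stub engines are hypothetical names, and the stubs stand in for the real EasyOCR and Tesseract calls so the example runs without either library installed.

```python
from typing import Callable, Iterable, Optional, Tuple


# Hypothetical helper: tries each OCR engine in order and returns the name
# and text of the first engine that produces enough characters.
def run_ocr_with_fallback(
    image: object,
    engines: Iterable[Tuple[str, Callable[[object], str]]],
    min_chars: int = 1,
) -> Tuple[Optional[str], str]:
    for name, engine in engines:
        try:
            text = engine(image).strip()
        except Exception as exc:  # a failing engine should not abort the request
            print(f"{name} failed: {exc}")
            continue
        if len(text) >= min_chars:
            return name, text
    return None, ""


# Stub engines (assumptions for the demo). In a real app these would wrap
# easyocr.Reader(['en']).readtext(image, detail=0), joined into one string,
# and pytesseract.image_to_string(image).
def fake_easyocr(image: object) -> str:
    return ""  # pretend the primary engine found nothing


def fake_tesseract(image: object) -> str:
    return "Hello world\n"


engine_used, text = run_ocr_with_fallback(
    image=None,
    engines=[("easyocr", fake_easyocr), ("tesseract", fake_tesseract)],
)
print(engine_used, repr(text))
```

Passing the engines in as a list keeps the ordering explicit, so swapping the primary engine or adding a third one is a one-line change.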

Another interesting challenge was the text-to-speech implementation. I originally planned to rely entirely on server-side TTS using pyttsx3, but discovered that browser-based speech synthesis actually provides a better user experience - it’s faster, doesn’t require network calls, and users can control playback with their system settings. So I flipped the architecture to use browser TTS as primary with server-side as backup.

Looking back, there are a few things I’d approach differently in a future project. I spent too much time early on trying to perfect the Tesseract configurations before discovering EasyOCR, which ended up being the better primary solution. If I were starting over, I’d research the latest OCR technologies first rather than defaulting to the most well-known option. I would also consider training a custom OCR model if I had access to better hardware and to more, higher-quality training data.

I also underestimated how much work would go into the accessibility features. Making something truly accessible isn’t just about adding alternate text - it requires rethinking the entire interaction model. This was valuable learning, but it definitely extended the development timeline.